The Signal Beneath the Noise: Why AI Needs a Human Lens

We're drowning in AI hype. It's time to find the signal. 📡

Artificial intelligence is everywhere—recommending your next song, grading student essays, analyzing cancer scans. But here's what the breathless headlines miss: the real challenge isn't building smarter AI. It's building AI that makes us smarter. 🧠

At FutureSignal.tech, we cut through the noise. No inflated promises. No doomsday prophecies. Just the authentic breakthroughs that matter—and the human questions they raise.

🚀 What's Actually Changing Right Now

Two systems emerged in 2024 that fundamentally shift how AI understands the world:

Google's Gemini 2.0 doesn't just read text—it thinks across images, audio, video, and code simultaneously. Ask it to analyze a medical chart while reviewing the doctor's notes and patient X-rays, and it connects the dots in real time. 🔗

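Meta's Llama 3.1 challenged the closed-model status quo. Its flagship model has 405 billion parameters and openly downloadable weights, and Meta reports benchmark results competitive with GPT-4-class systems. Because anyone can host it, it can also run for less than comparable closed services. More importantly, it puts cutting-edge AI in the hands of researchers, startups, and innovators who couldn't afford it before. 🔓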

This isn't incremental. This is perception reimagined.

⚙️ Under the Hood: What Makes These Systems Different

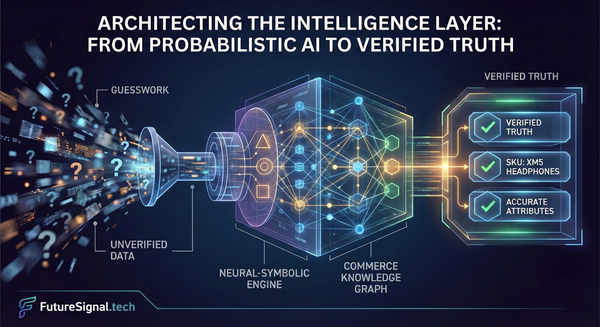

🔮 Gemini 2.0: When AI Sees the Whole Picture

Most AI reads one thing at a time. Gemini processes everything at once.

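The breakthrough: Google describes Gemini as natively multimodal, trained across modalities from the start rather than assembled from separate single-purpose models. That is what lets it understand how a chart relates to a paragraph, how a voice tone changes meaning, how visual context reshapes text. It's not stitching together separate analyses; it's thinking multimodally from the ground up.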
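Google hasn't published Gemini's internals in enough detail to reproduce, so what follows is only a generic sketch of cross-modal attention, the standard transformer technique for letting one modality "look at" another. Each text token (a query) scores every image patch (a key), a softmax turns those scores into weights, and the patches' value vectors are blended by those weights. The toy 2-dimensional vectors and the names `text_queries`, `image_keys`, and `image_values` are hypothetical; real models learn high-dimensional projections.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Scaled dot-product attention where queries come from one modality
    (here: text tokens) and keys/values come from another (here: image patches).
    Returns one blended output vector and one weight list per query."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors, dimension by dimension, using the weights.
        blended = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings: two text tokens, two image patches.
text_queries = [[1.0, 0.0],   # e.g. the word "chart"
                [0.0, 1.0]]   # e.g. the word "caption"
image_keys = [[1.0, 0.0],     # patch containing the chart
              [0.0, 1.0]]     # patch containing the caption
image_values = [[10.0, 0.0],
                [0.0, 10.0]]

outputs, weights = cross_attention(text_queries, image_keys, image_values)
for token, w in zip(["chart", "caption"], weights):
    print(token, [round(x, 3) for x in w])
```

Each text token ends up weighted toward the image patch it matches best. Production models stack many such layers with learned parameters, but the core idea is this weighted lookup across modalities.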

The scale: Context windows up to 1 million tokens 📊. That's not a typo. Feed it an entire medical history, a full codebase, or a 300-page legal brief. It holds the whole thing in working memory.
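To make the 300-page figure concrete, here is back-of-the-envelope arithmetic under two loudly stated assumptions: about 500 words per page, and about 0.75 English words per token (a common rule of thumb; real tokenizers vary by model and language). `estimate_tokens` and `fits` are hypothetical helpers, not part of any Gemini API.

```python
# Rough token estimate for long documents.
# ASSUMPTIONS (rules of thumb, not official figures):
#   - about 500 words per printed page
#   - about 0.75 English words per token
WORDS_PER_PAGE = 500
WORDS_PER_TOKEN = 0.75

def estimate_tokens(pages: int) -> int:
    """Estimate how many tokens a document of `pages` pages would use."""
    words = pages * WORDS_PER_PAGE
    return round(words / WORDS_PER_TOKEN)

def fits(pages: int, context_window: int) -> bool:
    """True if the estimated token count fits in the given context window."""
    return estimate_tokens(pages) <= context_window

print(estimate_tokens(300))        # 200000
print(fits(300, 1_000_000))        # True: fits a 1M-token window
print(fits(300, 128_000))          # False: overflows a 128K-token window
```

Under these assumptions the brief comes to about 200,000 tokens: comfortably inside a 1-million-token window, but too large for the 128,000-token window Llama 3.1 offers.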

The guardrails: Google pitches built-in safety tooling, including grounding answers in Google Search results to reduce hallucinations ⚠️. But the model itself is closed, so outside researchers can test its behavior rather than inspect its weights.

The reach: The Gemini family runs from large cloud models down to Gemini Nano, which is built to run on-device 📱. This isn't just cloud computing; some of this intelligence runs on your phone.

🌐 Llama 3.1: Intelligence Without Gatekeepers

Meta made a bold bet: give the technology away and let the world make it better.

Why it matters:

- ✅ Competitive with leading closed models on Meta's math, coding, and language benchmarks

- ✅ 128,000-token context handles long documents and extended conversations

- ✅ Three sizes (8B, 70B, 405B parameters) for everything from laptops to data centers

- ✅ Open weights allow inspection, fine-tuning, and local deployment (the training data itself is not public)
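Why do three sizes matter? A rough rule: the memory needed just to hold a model's weights is its parameter count times the bytes stored per parameter. The sketch below assumes weights only (no activations or KV cache), 1 GB = 10^9 bytes, and three common precisions (16-bit, 8-bit, and 4-bit quantized); `weight_memory_gb` is a hypothetical helper, not a Meta tool.

```python
# Approximate memory needed just to hold model weights.
# ASSUMPTIONS: weights only (no activations or KV cache), 1 GB = 1e9 bytes,
# and the listed precisions (16-bit, 8-bit, 4-bit quantization).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}
LLAMA_31_SIZES = {"8B": 8e9, "70B": 70e9, "405B": 405e9}

def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Gigabytes needed to store `params` weights at the given precision."""
    return params * bytes_per_param / 1e9

for name, params in LLAMA_31_SIZES.items():
    row = ", ".join(
        f"{prec}: {weight_memory_gb(params, b):.0f} GB"
        for prec, b in BYTES_PER_PARAM.items()
    )
    print(f"{name:>5} -> {row}")
```

By this estimate the 8B model needs about 16 GB at 16-bit precision and about 4 GB at 4-bit, within reach of a well-equipped laptop, while the 405B model needs about 810 GB at 16-bit, which means multi-GPU server hardware.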

The result: A vibrant ecosystem. Researchers fine-tune it for medical diagnosis. Startups adapt it for legal research. Artists train it on niche creative styles. The community moves faster than any single company could. 🏃‍♂️💨

"Open-source isn't just cheaper—it's safer. When everyone can inspect the system, problems get caught before they scale." 🔍

— AI Ethics Researcher

🌍 Where It's Actually Working

📚 Education: Beyond the Buzzword

The promise: Personalized learning for every student.

The reality: It's happening, but not how you'd think.

The University of Sydney deployed AI content personalization across 40,000 students. Results? Measurably higher performance 📈 across demographics—especially for students who traditionally struggled.

UK teachers using AI planning tools? Saving five hours weekly ⏰. That's not automation replacing jobs. That's teachers spending less time on lesson plans and more time with students who need help.

The catch: These systems are teaching assistants, not teachers. The magic happens when AI handles repetition and diagnosis while humans provide motivation, context, and connection. 🤝

🏥 Healthcare: Faster, Safer Diagnosis

Gemini-powered platforms now integrate:

- 🩺 Radiology images

- 🧪 Lab results

- 📋 Clinical notes

- 🧬 Genetic data

In one analysis. In real time.

Stanford Medical Center reported 23% faster diagnosis ⚡ for complex cases and 14% fewer errors when AI-assisted review became standard protocol.

Medical students training with AI simulators complete 3x more practice scenarios than traditional methods allow. They graduate more confident, more capable, more prepared. 💪

The AI isn't replacing doctors. It's giving them superpowers.

🌍 Climate: Intelligence Where It Counts

Satellite imagery 🛰️ + ground sensors + weather models 🌦️ + community reports = AI that predicts disasters before they peak.

California fire departments now use multimodal AI for 72-hour wildfire forecasting 🔥. Evacuation orders go out earlier. Lives get saved.

Energy grids optimized by AI reduce waste by 18-22% ⚡ by predicting demand spikes and routing renewable power more efficiently.

This is computation with consequences. 🌱

🎨 Creativity: New Tools, Not Replacements

Architects use AI to generate thousands of building design variants 🏗️ in hours, testing for energy efficiency, cost, and aesthetics simultaneously.

Film storyboard artists iterate concepts in real time 🎬, exploring visual ideas at the speed of thought.

Code reviewers get instant feedback across eight programming languages 💻, catching bugs before they ship.

The pattern? AI amplifies human creativity—it doesn't replace it. The vision still comes from us. 💡

⚖️ The Ethics Question We Can't Ignore

Here's the uncomfortable truth: who controls AI defines what AI values.

Gemini ships with safety tooling, but its weights are closed. Llama's weights are open for inspection, though its training data is not. And transparency alone isn't enough.

The real questions:

- ❓ Who decides what's "harmful" content?

- ❓ How do we prevent bias baked into training data from perpetuating discrimination?

- ❓ What happens when AI systems optimize for engagement over truth?

The shift toward open models, rigorous benchmarking, and collaborative oversight isn't just technical—it's cultural. We're learning that trustworthy AI requires:

- ✅ Diverse teams building it

- ✅ Independent audits testing it

- ✅ Public accountability for when it fails

- ✅ Governance that evolves as fast as the technology

We're not there yet. But the conversation is finally starting. 🗣️

🔭 What Comes Next

AI isn't going to replace human intelligence. But it will redefine what human intelligence can accomplish.

The question isn't whether AI will change everything—it already has. The question is whether we'll build it to amplify our best qualities or accelerate our worst.

That's still a human job. AI just helps us do it better. 🎯

📡 Decoding Complexity, Amplifying Signal

At FutureSignal.tech, we treat intelligence as a living dialogue: not just numbers or benchmarks, but understanding.

We don't chase hype cycles. We don't peddle techno-utopianism. Instead, we do the harder work: untangling complexity, spotlighting authentic breakthroughs, and fostering genuine conversations about the ethics, dilemmas, and human stories that shape our technological future.

Every article you'll find here is built on three principles:

🔍 Evidence over excitement

We spotlight advances backed by transparent benchmarks, peer-reviewed research, and real-world impact—not press releases and promises.

🧠 Context over hype

Technology doesn't exist in a vacuum. We explore how AI intersects with policy, culture, economics, and the messy reality of human organizations.

💬 Dialogue over monologue

The best insights emerge from conversation. We're not here to lecture—we're here to think alongside you.

🚀 Join the Community

By subscribing to FutureSignal.tech, you're not just getting articles. You're joining a community of thinkers, builders, and dreamers who see AI not as a tool, but as a lens for understanding the future. 🔮

What you get:

📚 Curated archive access – Deep-dives, case studies, and technical breakdowns worth revisiting

📬 Exclusive insights – Analysis and perspectives delivered straight to your inbox

🤝 Engaged community – Connect with people asking the same hard questions you are

🎯 Signal, not noise – We respect your time. Every word earns its place.

This is for you if:

- ✨ You're tired of AI coverage that's all promises and no proof

- 🎓 You want to understand the technology shaping the future—not just the talking points

- 💭 You believe that intelligence, real intelligence, requires wisdom as much as computation

- 🚦 You're ready to move past "AI will save/destroy us" and into "here's what's actually happening"

💡 Let's Find the Signal—Together

The future isn't written in code alone. It's written in the choices we make about how to use that code, who gets to build it, and what values we encode into the systems that increasingly shape our world.

No hype. No noise. Just signal. 📡

Subscribe Now – It's Free → ✉️

📖 Sources & Further Reading

- Google DeepMind (2024). Gemini 2.0: Technical Report

- Meta AI (2024). The Llama 3 Herd of Models

- University of Sydney (2024). AI Personalization in Higher Education: Outcomes Report

- UK Department for Education (2024). AI in Schools: Teacher Workload and Student Outcomes Study

- Stanford Medical Center (2024). AI-Assisted Diagnosis: A Retrospective Efficacy Analysis

- California Fire Department (2024). Multimodal AI for Wildfire Prediction: Pilot Results