🔍 From Queries to Context: The Next Frontier of Digital Platform Intelligence

Part 1: Foundations & Architecture

Target Audience: Technical leaders, architects, product managers seeking conceptual understanding

How semantic search powered by AI embeddings and vector databases is revolutionizing enterprise commerce—not as a feature, but as the foundation for truly intelligent platforms

📝 Editorial Note: This technical article is based on observed patterns from production deployments, published academic research, and publicly available technical documentation. Performance metrics represent aggregated, anonymized observations and should not be interpreted as guaranteed outcomes. Technology specifications, API availability, and pricing are current as of November 2024 but evolve rapidly—readers should verify current details with official provider documentation. Code examples illustrate architectural patterns and are not intended as production-ready implementations without additional engineering and testing.

Executive Summary

⚡ The transformation from keyword-based search to semantic understanding represents more than a technical upgrade: it signals the emergence of "digital platform intelligence," where systems comprehend context, intent, and meaning rather than merely matching syntax. This series explores how enterprise platforms can leverage 🤖 AI embedding models and 📊 vector databases to deploy production-grade semantic search, complete with code examples spanning data ingestion, hybrid ranking, evaluation frameworks, multilingual support, and cost optimization.

🎯 Beyond implementation details, we examine how semantic search serves as the foundational layer for broader platform intelligence—recommendation engines, predictive analytics, and context-aware personalization—that will define competitive advantage in the AI era.

💡 With the democratization of transformer models and vector database technology, the barrier to entry has collapsed. The new differentiator isn't access to the technology, but excellence in data quality, evaluation rigor, and architectural vision. Important caveat: While semantic search is increasingly accessible, successful production deployment requires significant expertise in machine learning operations, data engineering, and search relevance tuning. Organizations should plan for 3-6 month implementation timelines and dedicated cross-functional teams.

⏰ For organizations operating legacy enterprise platforms, the window to lead this transition is open but narrowing rapidly.

💸 When a $50M catalog becomes a $5M problem

Picture this: A procurement manager at a Fortune 500 manufacturer types "corrosion-resistant fasteners for marine applications" into their supplier's enterprise platform. The system returns nothing—or worse, thousands of irrelevant results—because the catalog uses terms like "stainless steel bolts" and "saltwater-rated hardware." She abandons the search. The supplier loses a six-figure order. 📉 This scenario plays out thousands of times daily across enterprise commerce, where the gap between how buyers think and how catalogs are structured costs businesses millions in lost revenue.

❌ The culprit? Legacy keyword search—a relic of a pre-AI era that treats language as a matching game rather than a meaning-making system. But a quiet revolution is underway. 🚀 We're witnessing the emergence of what industry analysts call "digital platform intelligence"—systems that don't just match words but understand context, intent, and meaning at every interaction point.

🧠 Semantic search, powered by neural embedding models and vector databases, sits at the vanguard of this transformation. It's the visible tip of a larger iceberg: platforms that comprehend rather than compute, that understand rather than index, that deliver context-aware experiences rather than keyword-dependent results.

⚠️ This isn't incremental improvement. It's a categorical leap from rigid, syntax-dependent systems to fluid, intent-understanding platforms. And for enterprise commerce specifically, it's the difference between platforms that serve transactions and platforms that enable discovery, exploration, and informed decision-making.

🔄 The Semantic Shift: From Matching to Understanding

Traditional search operates on a brutally simple premise: if your query contains the word "eco-friendly" and the product description doesn't, you're out of luck. Semantic search flips this paradigm. By mapping queries and product data into high-dimensional vector spaces where semantic similarity corresponds to geometric proximity, these systems recognize that "eco-friendly packaging" and "biodegradable shipping materials" are closely related concepts, even though they share no keywords.

💥 In practice, the shift delivers:

🎯 Contextual Intelligence: The system distinguishes "apple" (fruit) from "Apple" (tech company) from "apple" (a type of wood finish) based on surrounding context—no manual tagging required.

🌐 Linguistic Flexibility: Industry jargon and conversational phrasing tend to resolve to the same semantic destination, as do queries in other languages when the embedding model is multilingual; tolerance for misspellings is often good but varies by model. "waterproof connectors," "IP68-rated cable joints," and "submersible electrical fittings" converge on the same product cluster.

⚡ Zero-Shot Learning: New products inherit semantic relationships without explicit programming. Add a "graphene-enhanced battery pack" to your catalog, and as soon as its description is embedded and indexed, the system places it near queries about "long-lasting power supplies" and "lightweight energy storage," with no model retraining needed.

🧩 Intent Capture: Long-tail queries like "something to protect outdoor electronics from rain but not block ventilation" find relevant products that traditional search would miss entirely.

📈 The impact on key metrics tells the story: early production deployments reported by industry practitioners show 20-45% improvements in search-to-purchase conversion rates, 35-65% reductions in zero-result queries, and measurable increases in average order values as customers discover more precisely matched products. However, results vary significantly based on catalog quality, implementation approach, and baseline search performance.

🏗️ The Architecture of Understanding

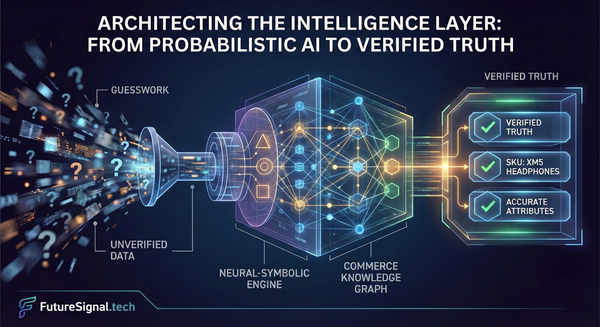

Building production-grade semantic search requires three synergistic components, each playing a distinct role in the transformation from text to meaning to results.

1. 🧠 Neural Embedding: Teaching Machines to "Get" Language

At the heart of semantic search lies a deceptively simple idea: represent text as coordinates in a vast multidimensional space where semantic similarity equals geometric proximity. Transformer-based models—BERT, Sentence Transformers, and embedding-specific architectures—excel at this task, having learned linguistic patterns from billions of text examples.

Feed these models a product description like "industrial-grade corrosion inhibitor for offshore equipment," and they output a vector (commonly 384, 768, or 1536 dimensions, depending on the model) encoding not just the words but their relationships, implications, and contextual nuances. Query the same model with "rust prevention coating for ocean platforms," and it generates a vector that, despite sharing almost no keywords, sits geometrically close to the product vector. Cosine similarity scores of 0.75-0.85 or higher are typical for semantically related pairs, although absolute score ranges differ between models, so any relevance threshold should be calibrated for the model in use.
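To make "geometric proximity" concrete, here is a minimal sketch of cosine similarity, the score referred to above. The four-dimensional vectors are hand-picked, hypothetical stand-ins for real model output; only the formula itself, dot(a, b) / (|a| |b|), carries over to production use.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional vectors chosen by hand for illustration only;
# a real embedding model would output 384, 768, 1536, or more dimensions.
product = [0.9, 0.1, 0.8, 0.2]          # "industrial-grade corrosion inhibitor ..."
related_query = [0.8, 0.2, 0.9, 0.1]    # "rust prevention coating ..."
unrelated_query = [0.1, 0.9, 0.0, 0.7]  # e.g. "wireless office headset"

print(f"related:   {cosine_similarity(product, related_query):.3f}")
print(f"unrelated: {cosine_similarity(product, unrelated_query):.3f}")
```

With these toy vectors the related pair scores close to 1 and the unrelated pair far lower. Many embedding APIs return unit-normalized vectors, in which case cosine similarity reduces to a plain dot product.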

💡 The sophistication lies in what these models learned during pre-training: "industrial-grade" implies durability standards, "offshore" connotes saltwater exposure, and "inhibitor" relates to "prevention." No explicit rules encode these connections—they emerge from observing language patterns at scale.

2. 🗄️ Vector Databases: Searching in 1,536 Dimensions

Conventional databases excel at exact matches and range queries but buckle under semantic search's core operation: finding the nearest neighbors to a query vector among millions of product vectors in high-dimensional space. Enter specialized vector databases like Milvus, Pinecone, and Weaviate, along with similarity-search libraries such as FAISS.
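For intuition about what these systems replace, the sketch below performs exact nearest-neighbor search with a linear scan. It is correct, but it scores every stored vector on every query. The catalog, item ids, and function names are hypothetical.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def exact_top_k(query: list[float], vectors: dict[str, list[float]], k: int) -> list[tuple[float, str]]:
    """Exact nearest-neighbor search by linear scan.

    Every stored vector is scored against the query, so each query costs
    O(N * d) for N vectors of dimension d. This is the work that ANN indexes
    such as HNSW and IVF are built to avoid at million- or billion-vector scale.
    """
    scored = ((cosine(query, vec), item_id) for item_id, vec in vectors.items())
    return heapq.nlargest(k, scored)  # (score, item_id) pairs, best first


# Hypothetical toy catalog of 3-dimensional vectors.
catalog = {
    "stainless-bolt": [0.9, 0.1, 0.0],
    "marine-hardware": [0.8, 0.3, 0.1],
    "desk-lamp": [0.0, 0.2, 0.9],
}
for score, item_id in exact_top_k([1.0, 0.2, 0.0], catalog, k=2):
    print(f"{item_id}: {score:.3f}")
```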

⚙️ These systems employ sophisticated indexing strategies—HNSW (Hierarchical Navigable Small World) graphs, IVF (Inverted File) indexes, and quantization techniques—that accelerate billion-scale nearest-neighbor searches from what would be impractical linear scans to sub-second queries. Modern implementations typically achieve query latencies of 10-100 milliseconds for million-scale datasets, though performance depends heavily on dimensionality, index configuration, and recall requirements. They're optimized for the unique characteristics of embedding vectors: high dimensionality, dense numeric data, and similarity-based rather than equality-based queries.
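The inverted-file (IVF) idea named above can be illustrated with a toy index: cluster the vectors with k-means, store each vector in the list of its nearest centroid, and at query time scan only the `n_probe` closest lists. This is a simplified sketch for intuition, not how Milvus or FAISS implement IVF internally; `ToyIVFIndex`, `train_centroids`, and `recall_at_k` are hypothetical names. Recall is measured against an exact scan, since that is the trade-off the latency figures above depend on.

```python
import math
from collections import defaultdict


def train_centroids(vectors: list[list[float]], n_lists: int, iterations: int = 10) -> list[list[float]]:
    """Plain k-means (Lloyd's algorithm), initialised with the first n_lists vectors."""
    centroids = [list(v) for v in vectors[:n_lists]]
    for _ in range(iterations):
        buckets = defaultdict(list)
        for v in vectors:
            nearest = min(range(n_lists), key=lambda c: math.dist(v, centroids[c]))
            buckets[nearest].append(v)
        for c, members in buckets.items():
            centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


class ToyIVFIndex:
    """Each vector is stored in the list of its nearest centroid; a query
    scans only the n_probe closest lists instead of the whole collection."""

    def __init__(self, centroids: list[list[float]]):
        self.centroids = centroids
        self.lists = defaultdict(list)  # centroid index -> [(item_id, vector), ...]

    def _closest_lists(self, vec: list[float], n: int) -> list[int]:
        order = sorted(range(len(self.centroids)), key=lambda c: math.dist(vec, self.centroids[c]))
        return order[:n]

    def add(self, item_id: str, vec: list[float]) -> None:
        self.lists[self._closest_lists(vec, 1)[0]].append((item_id, vec))

    def search(self, query: list[float], k: int, n_probe: int = 1) -> list[str]:
        candidates = [entry for c in self._closest_lists(query, n_probe) for entry in self.lists[c]]
        candidates.sort(key=lambda entry: math.dist(query, entry[1]))
        return [item_id for item_id, _ in candidates[:k]]


def recall_at_k(approx_ids: list[str], exact_ids: list[str]) -> float:
    """Fraction of the true top-k neighbors that the approximate search returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)


# Hypothetical 2-D data with two obvious clusters.
data = {
    "a1": [0.0, 0.0], "a2": [0.1, 0.2], "a3": [0.2, 0.1],
    "b1": [5.0, 5.0], "b2": [5.1, 4.9], "b3": [4.8, 5.2],
}
index = ToyIVFIndex(train_centroids(list(data.values()), n_lists=2))
for item_id, vec in data.items():
    index.add(item_id, vec)

query = [0.0, 0.05]
approx = index.search(query, k=2, n_probe=1)
exact = sorted(data, key=lambda i: math.dist(query, data[i]))[:2]
print(approx, recall_at_k(approx, exact))
```

Raising `n_probe` scans more lists, which raises recall at the cost of latency; HNSW makes an analogous trade-off through its search-breadth parameter (commonly called `ef`).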

🚀 Modern vector databases also handle the operational complexities of production deployments: horizontal scaling across distributed clusters, real-time updates as catalogs change, hybrid filtering that combines vector similarity with traditional attribute constraints ("find similar products, but only from approved vendors with more than 100 units in stock"), and multi-tenancy for platforms serving thousands of distinct catalogs.
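A minimal sketch of the hybrid-filtering example, assuming pre-filtering: products that fail the attribute constraints are excluded before similarity ranking. The `Product` fields, vendor names, and vectors are hypothetical; real vector databases express such constraints through their own filter syntax.

```python
import math
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    vendor: str
    stock: int
    vector: list[float]  # hypothetical precomputed embedding


def cosine(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def filtered_search(query: list[float], products: list[Product],
                    approved_vendors: set[str], min_stock: int, k: int) -> list[str]:
    """Pre-filtering: drop products that fail the attribute constraints,
    then rank only the survivors by vector similarity."""
    eligible = [p for p in products if p.vendor in approved_vendors and p.stock > min_stock]
    eligible.sort(key=lambda p: cosine(query, p.vector), reverse=True)
    return [p.sku for p in eligible[:k]]


catalog = [
    Product("BOLT-316", "acme", 250, [0.9, 0.1]),
    Product("BOLT-304", "acme", 40, [0.95, 0.05]),        # very similar, but only 40 in stock
    Product("HW-MARINE", "other-co", 500, [0.92, 0.08]),  # similar, but vendor not approved
    Product("LAMP-01", "acme", 900, [0.1, 0.9]),
]
print(filtered_search([1.0, 0.0], catalog, approved_vendors={"acme"}, min_stock=100, k=2))
```

The alternative, post-filtering, retrieves the top-K by similarity first and then drops ineligible items; it is simpler to bolt on but can return fewer than `k` results when the constraints are selective.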

3. 🔄 The Semantic Query Pipeline: Orchestrating Discovery

When a user submits a query, the semantic search pipeline executes a carefully choreographed sequence:

1️⃣ Embedding Generation: The query passes through the same neural model that encoded the product catalog, producing a semantically dense vector representation.

2️⃣ Vector Retrieval: The vector database performs an approximate nearest neighbor search, retrieving the top-K most similar product embeddings—typically K=100-500 candidates.

3️⃣ Hybrid Ranking: Raw vector similarity scores often benefit from enrichment. Leading implementations combine semantic scores with traditional signals: exact keyword matches, product popularity, pricing fit, availability status, and collaborative filtering signals from user behavior.

4️⃣ Re-ranking: A second-stage model (often a cross-encoder) can re-score the top candidates using more computationally expensive methods for refined relevance.

5️⃣ Business Logic: Final results pass through business rules: personalization based on customer segment, inventory availability, contractual pricing, and merchandising priorities.
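The five stages can be sketched end to end in a few dozen lines. Everything here is a hypothetical stand-in chosen to keep the example self-contained: `WORD_VECTORS` and `embed` replace a real embedding model, retrieval is an exact scan rather than an ANN query, the blend weights (0.7 semantic, 0.2 keyword, 0.1 popularity) are illustrative rather than recommended, and the cross-encoder stage is left as a comment.

```python
import math
from dataclasses import dataclass

# Hypothetical toy word vectors standing in for a real embedding model.
WORD_VECTORS = {
    "rust": [0.9, 0.1, 0.0], "corrosion": [0.85, 0.15, 0.0],
    "coating": [0.6, 0.4, 0.0], "inhibitor": [0.7, 0.3, 0.0],
    "desk": [0.0, 0.2, 0.8], "lamp": [0.0, 0.1, 0.9],
}


def embed(text: str) -> list[float]:
    """Toy embedder: average the vectors of known words (zero vector if none)."""
    vecs = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(a: list[float], b: list[float]) -> float:
    na, nb = math.hypot(*a), math.hypot(*b)
    return 0.0 if na == 0 or nb == 0 else sum(x * y for x, y in zip(a, b)) / (na * nb)


@dataclass
class Item:
    sku: str
    text: str
    popularity: float  # assumed pre-normalized to [0, 1]
    in_stock: bool


def search(query: str, catalog: list[Item], top_k: int = 100, final_n: int = 10,
           weights: tuple[float, float, float] = (0.7, 0.2, 0.1)) -> list[str]:
    # In production, item vectors are computed at indexing time and stored in a vector DB.
    item_vecs = {it.sku: embed(it.text) for it in catalog}

    q_vec = embed(query)  # 1. embedding generation (same model as the catalog)
    # 2. vector retrieval: an exact scan here; a real system would query an ANN index
    candidates = sorted(catalog, key=lambda it: cosine(q_vec, item_vecs[it.sku]), reverse=True)[:top_k]

    # 3. hybrid ranking: blend semantic, keyword-overlap, and popularity signals
    q_terms = set(query.lower().split())
    w_sem, w_kw, w_pop = weights

    def hybrid_score(it: Item) -> float:
        overlap = len(q_terms & set(it.text.lower().split())) / len(q_terms) if q_terms else 0.0
        return w_sem * cosine(q_vec, item_vecs[it.sku]) + w_kw * overlap + w_pop * it.popularity

    ranked = sorted(candidates, key=hybrid_score, reverse=True)
    # 4. re-ranking: a cross-encoder could re-score the head of `ranked` here (omitted)
    # 5. business logic: only in-stock items are returned in this sketch
    return [it.sku for it in ranked if it.in_stock][:final_n]


catalog = [
    Item("INH-1", "industrial corrosion inhibitor", 0.4, True),
    Item("CT-2", "marine coating", 0.9, True),
    Item("CT-3", "rust coating", 0.5, False),
    Item("LMP-4", "desk lamp", 1.0, True),
]
print(search("rust prevention coating", catalog, final_n=2))
```

Note that "industrial corrosion inhibitor" ranks second despite sharing no words with the query, while the strongest semantic and keyword match, "rust coating," is dropped at the business-logic stage because it is out of stock.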

✅ This layered approach balances pure semantic matching with practical business constraints, ensuring that the "best" result isn't just semantically similar but also actionable and profitable.
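The hybrid-ranking stage described above can blend raw scores with fixed weights, but that assumes the signals sit on comparable scales. A commonly used alternative when they do not is reciprocal rank fusion (RRF), which combines ranked lists using only rank positions. The sketch below is a minimal version; the input rankings are hypothetical, and k=60 is the constant commonly used in practice.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of document ids: each id earns 1 / (k + rank) from every
    list it appears in (rank is 1-based), and ids are sorted by total score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.__getitem__, reverse=True)


# Hypothetical outputs of a semantic retriever and a keyword retriever.
semantic_ranking = ["INH-1", "CT-2", "CT-3"]
keyword_ranking = ["CT-3", "CT-2", "LMP-4"]
print(reciprocal_rank_fusion([semantic_ranking, keyword_ranking]))
```

Items found by both retrievers (CT-2 and CT-3) rise to the top. Because RRF ignores raw score magnitudes, it avoids normalizing cosine similarities against keyword-relevance scores such as BM25, at the cost of discarding how confident each retriever was.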

🔬 The Evolution of Search Intelligence: Standing on Google's Shoulders

Google didn't invent semantic understanding, but the company's sequential innovations created the foundation for today's platform intelligence revolution. Understanding this evolution helps contextualize where we are and where we're heading.

📅 The 2013 Hummingbird update marked Google's first major shift from keyword matching toward conversational query interpretation, recognizing that queries such as "what's the tallest mountain in the world" and "how tall is Mount Everest" seek closely related information. RankBrain (deployed in 2015 and publicly disclosed in October 2015) introduced machine learning for handling ambiguous queries, teaching systems to infer intent from context. BERT (October 2019) brought transformer-based natural language understanding to search at scale, finally teaching systems to grasp linguistic nuance and contextual meaning.

🤖 Today's Gemini models (launched December 2023) represent the latest evolution: multimodal transformers trained on text, code, images, and audio, generating embeddings that capture cross-modal relationships and deep semantic understanding. The embedding APIs from Google, OpenAI, and Cohere democratize this technology, offering production-grade semantic encoding through simple API calls.

💡 This democratization of semantic understanding is what makes platform intelligence accessible to organizations of varying sizes. The same transformer architectures that power large-scale search engines are available to enterprise platforms through commercial APIs and open-source implementations. However, the primary barrier isn't technology access; it's architectural vision, data quality, operational expertise, and implementation discipline. Access to APIs doesn't automatically translate into effective deployment.

⚡ Yet this commoditization creates a new competitive landscape. When everyone can deploy semantic search, differentiation comes from how you deploy it: the quality of your data enrichment, the sophistication of your hybrid ranking, the rigor of your evaluation, and most critically, how you integrate semantic understanding into a broader platform intelligence strategy rather than treating it as an isolated feature.

🔄 The pattern is familiar in AI technology adoption: cutting-edge research becomes cloud services becomes competitive table stakes becomes foundation for higher-order capabilities. Organizations that viewed semantic search as experimental or exotic in 2019-2020 now risk competitive disadvantage without it. But those viewing it merely as "better search" miss the larger opportunity: semantic understanding as the foundation for comprehensive platform intelligence.

📚 Key Architectural Concepts Summary

Vector Embeddings

- High-dimensional numerical representations of text (commonly 384-1536 dimensions; some models use more)

- Capture semantic meaning, not just keywords

- Enable mathematical similarity calculations

- Generated by transformer-based neural models

Vector Databases

- Specialized storage for high-dimensional vectors

- Optimized for similarity search, not exact matching

- Use advanced indexing (HNSW, IVF) for speed

- Support hybrid filtering with traditional attributes

Hybrid Search

- Combines semantic similarity with keyword matching

- Incorporates business rules and personalization

- Multi-stage ranking for optimal results

- Balances relevance with business constraints

Platform Intelligence

- Semantic understanding as foundational capability

- Powers search, recommendations, personalization

- Continuous learning from user interactions

- Cross-functional organizational requirement

🎯 Key Takeaways for Architects

🔑 Architecture First — Semantic search isn't a feature, it's infrastructure. Design for extensibility across recommendations, merchandising, and support.

⚙️ Technology is Commoditized — APIs and tools are accessible. Competitive advantage comes from data quality, evaluation rigor, and operational excellence.

🔄 Build for Evolution — Embedding models improve quarterly. Architect systems that embrace model updates, not resist them.

📊 Hybrid Approaches Win — Pure semantic search has gaps. Combine with keyword matching, business rules, and personalization for production quality.

👥 Cross-Functional Imperative — Requires collaboration across data engineering, ML ops, product, and business teams. Single-team ownership fails.
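The "Build for Evolution" takeaway has one concrete consequence worth making explicit: because queries must be embedded with the same model that encoded the catalog, an index should record which model built it, and a model upgrade means re-embedding the catalog rather than mixing vectors from different models. The sketch below is one minimal way to express that; `VersionedIndex`, `rebuild`, the model ids, and the toy embedders are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

Embedder = Callable[[str], list[float]]


@dataclass
class VersionedIndex:
    model_id: str  # hypothetical identifier of the embedding model that built the vectors
    vectors: dict[str, list[float]] = field(default_factory=dict)

    def accepts(self, query_model_id: str) -> bool:
        # Vectors from different models live in different spaces, so a query must be
        # embedded with the same model that built the index.
        return query_model_id == self.model_id


def rebuild(texts: dict[str, str], model_id: str, embed: Embedder) -> VersionedIndex:
    """Re-embed the full catalog with a (new) model into a fresh index."""
    return VersionedIndex(model_id, {sku: embed(text) for sku, text in texts.items()})


texts = {"BOLT-316": "stainless steel bolt", "LAMP-01": "desk lamp"}
# Hypothetical toy embedders standing in for two model versions.
live = rebuild(texts, "embed-v1", lambda t: [float(len(t)), 0.0])
candidate = rebuild(texts, "embed-v2", lambda t: [0.0, float(len(t.split()))])

# Blue/green style cut-over: switch the serving pointer only after the new index is complete.
live = candidate
print(live.model_id, live.accepts("embed-v1"), live.accepts("embed-v2"))
```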

Continue to Part 2: Implementation & Production for complete code examples, deployment patterns, monitoring strategies, and optimization techniques.

Continue to Part 3: Strategy & Future for business implications, competitive positioning, industry applications, and future roadmap.

📖 Academic Foundations Referenced:

- "Attention Is All You Need" (Vaswani et al., 2017, NeurIPS): The transformer architecture that underlies modern embedding models

- "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" (Devlin et al., 2018, arXiv:1810.04805)

- "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks" (Reimers & Gurevych, 2019, EMNLP): Foundation for sentence-level semantic similarity

- "Efficient and Robust Approximate Nearest Neighbor Search Using Hierarchical Navigable Small World Graphs" (Malkov & Yashunin, 2018, IEEE TPAMI): The HNSW algorithm used in production vector databases

💡 "The future belongs to platforms that understand, not just those that compute. Build accordingly."

📧 Access Full Code Examples: Complete implementation code is available in the companion code repository. See Part 2 for details.